***

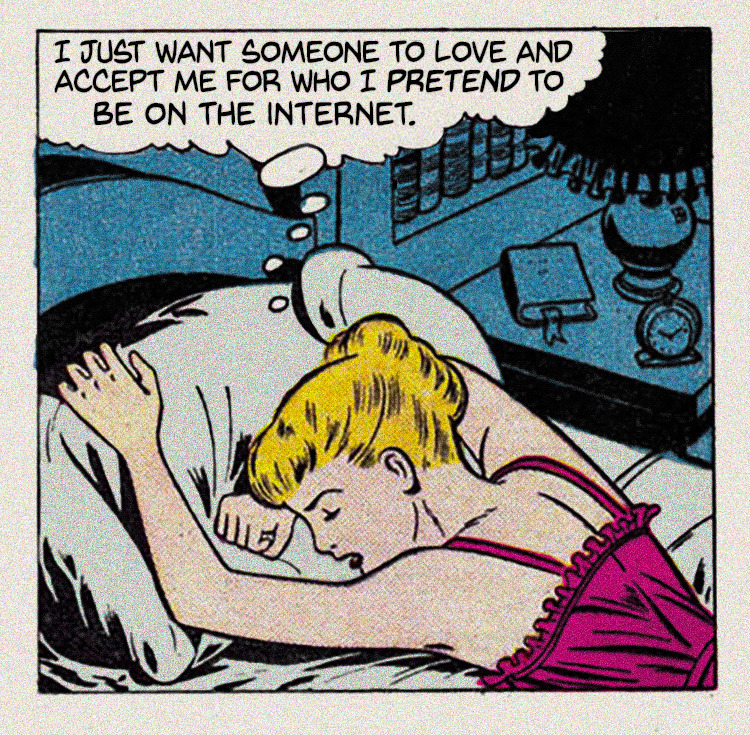

Shocking recent studies (not really) say that 95.9% of all people on the internet are pretending to be someone they’re not.

Whether they’re blowing Pollyanna bubbles at anyone who will listen about how wonderful, carefree and titillating their lives are, or about how stimulating and interesting they are because they’re an alpha male, a female empath or some other know-it-all (which, of course, none of them are), or about how deep and fascinating their multiple personalities can be, depending on which one you happen to be interested in at any given moment, one thing is certain: they are not who they portray themselves to be. In other words, they’re either sociopaths, insane, or just bullshitters. Some of them are soft bullshitters; some of them are hard bullshitters.

And it seems that AI, you know, that technology most people think just invented itself, and that day after day expands its knowledge, educates itself and will sooner rather than later run the world, has its own bullshitting issues.

RESEARCHERS SAY THERE’S A VULGAR BUT MORE ACCURATE TERM FOR AI HALLUCINATIONS – via futurism.com

It’s not just your imagination — ChatGPT really is spitting out “bullshit,” according to a group of researchers.

In a new paper published in the journal Ethics and Information Technology, a trio of philosophy researchers from the University of Glasgow in Scotland argue that chatbots’ propensity to make crap up shouldn’t be called “hallucinations,” because it’s actually something much less flattering.

Hallucination, as anyone who’s studied psychology or taken psychedelics knows well, is generally defined as seeing or perceiving something that isn’t there. Its use in the context of artificial intelligence is clearly metaphorical, because large language models (LLMs) don’t see or perceive anything at all — and as the Glasgow researchers maintain, that metaphor misses the mark when the concept of “bullshitting” is right there.

“The machines are not trying to communicate something they believe or perceive,” the paper reads. “Their inaccuracy is not due to misperception or hallucination. As we have pointed out, they are not trying to convey information at all. They are bullshitting.”

Calling Bull

At the crux of the assertion from researchers Michael Townsen Hicks, James Humphries, and Joe Slater is philosopher Harry Frankfurt’s hilarious and cutting 2005 epistemology opus “On Bullshit.” As the Glaswegians summarize it, Frankfurt’s general definition of bullshit is “any utterance produced where a speaker has indifference towards the truth of the utterance.” That explanation, in turn, is divided into two “species”: hard bullshit, which occurs when there is an agenda to mislead, and soft bullshit, which is uttered without agenda.

“ChatGPT is at minimum a soft bullshitter or a bullshit machine, because if it is not an agent then it can neither hold any attitudes towards truth nor towards deceiving hearers about its (or, perhaps more properly, its users’) agenda,” the trio writes.

Rather than having any intention or agenda, chatbots have one singular objective: to output human-like text. Citing the lawyer who used ChatGPT to write a legal brief and ended up presenting a bunch of “bogus” legal precedents before the judge, the UG team asserts that LLMs have proven themselves adept bullshitters — and that sort of thing could become more and more dangerous as people keep relying on chatbots to work for them.

“Investors, policymakers, and members of the general public make decisions on how to treat these machines and how to react to them based not on a deep technical understanding of how they work, but on the often metaphorical way in which their abilities and function are communicated,” the researchers proclaim. “Calling their mistakes ‘hallucinations’ isn’t harmless: it lends itself to the confusion that the machines are in some way misperceiving but are nonetheless trying to convey something that they believe or have perceived.”

So just like their inventors, AI can produce some really bogus shit. Who would have thunk it!

Will the hallucinations of AI be called out for what they are? Probably not. Just look at the humans spewing out their hallucinations and bogus bullshit about themselves and hardly a soul calls them out for what they are.

Hard or soft bullshit… it’s all bogus, and it’s mostly what people (and AI) crave.

***

Tonight’s musical offering:

WHOA!

Tubularsock is solidly behind “pollyanna bubbles” when it comes to bullshit. Tubularsock ONLY DEALS in hard core BULLSHIT! It’s a way of life.

With HARD core bullshit Tubularsock doesn’t have to wear high boots the way one does when dealing with SOFT bullshit, if you understand the simplicity of the concept.

Oh sure, Tubularsock likes just a little insane sociopathic flavoring to round it all out.

The downfall of AI is simple. IT is being modeled by the “human mind”! YEP, in just a little bit the shit will hit the fan! Rapid fire dumbness!


“Rapid fire dumbness”….too good! Thank you, Tube!
